Import data from Gen3 BDC and Gen3 AnVIL

Overview

This feature lets you bring data to CAVATICA after creating a cohort on Gen3 BioData Catalyst or Gen3 AnVIL. The imported data includes DRS URIs and a subset of the metadata from the PFB file.

In addition, the PFB file that was used as a manifest is also imported into your project since it contains all available data.
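To make the term concrete, the sketch below shows what a hostname-based DRS URI looks like and how it splits into a server name and an object ID. It is only an illustration: the helper name `split_hostname_drs_uri` and the example URI are hypothetical, and it assumes the hostname-based form (`drs://<hostname>/<id>`). URIs in your manifest may instead use the compact-identifier form, which this sketch does not handle.

```python
from typing import Tuple
from urllib.parse import urlparse


def split_hostname_drs_uri(uri: str) -> Tuple[str, str]:
    """Split a hostname-based DRS URI into (hostname, object_id).

    Hypothetical helper for illustration; compact-identifier DRS URIs
    are not supported here.
    """
    parsed = urlparse(uri)
    if parsed.scheme != "drs":
        raise ValueError(f"Not a DRS URI: {uri!r}")
    object_id = parsed.path.lstrip("/")
    if not parsed.netloc or not object_id:
        raise ValueError(f"Expected drs://<hostname>/<id>, got: {uri!r}")
    return parsed.netloc, object_id


# Example URI is made up and does not point to a real object.
host, object_id = split_hostname_drs_uri("drs://drs.example.org/314159")
print(host, object_id)
```

The import feature resolves these URIs for you, so a helper like this is only useful if you want to inspect the manifest contents yourself.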

The procedure consists of:

- Selecting and exporting data from BioData Catalyst powered by Gen3 or Gen3 AnVIL.

- Connecting your Gen3 BioData Catalyst or Gen3 AnVIL account to your account on CAVATICA. This step occurs the first time you land on the Platform after exporting data from either Gen3 BDC or Gen3 AnVIL.

- Importing data into a controlled project on CAVATICA.

- (Optional) If you want to use raw clinical data in your analyses, unpacking and converting Avro files to JSON.

Selecting and exporting data from BioData Catalyst powered by Gen3

This step takes place on BioData Catalyst powered by Gen3.

Before you can import the data into your project, you first need to export it from BioData Catalyst Gen3 by following the procedure below:

- On the top navigation bar click Exploration.

- In the pane on the left, select the Data tab.

- Use the available filters in the Filters section to select the patient data you are interested in.

- When you're done filtering, click Export to Seven Bridges and choose the Export to CAVATICA option to start the export process.

Selecting and exporting data from AnVIL powered by Gen3

This step takes place on AnVIL powered by Gen3.

Before you can import the data into your project, you first need to export it from AnVIL Gen3 by following the procedure below:

- On the top navigation bar click Exploration.

- In the pane on the left, select the Data tab.

- Use the available filters in the Filters section to select the patient data you are interested in.

- When you're done filtering, click Export to Seven Bridges and choose the Export to CAVATICA option to start the export process.

Along with the files you have selected, a PFB (Avro) file is also exported, which may contain additional information.

Importing data into a project on CAVATICA

This step takes place on CAVATICA.

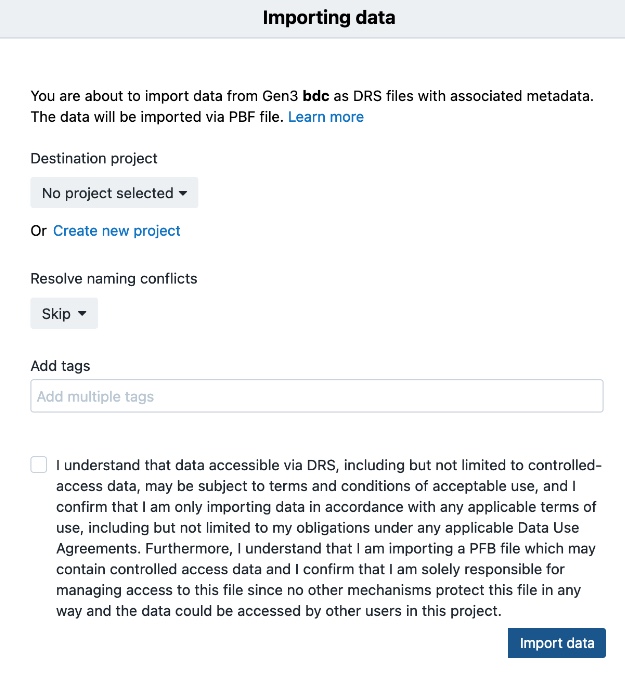

After using the export feature, you will land on a page for setting up the import to the Platform (further discussed below).

- Select the project you want to import the data to. Important: this data can only be imported to a controlled project. Alternatively, you can create a new project and import the files there.

- (Optional) Add tags for the files.

- (Optional) Choose the method for resolving naming conflicts in case files with the same names already exist in your project.

- Click Import Data.

The files will be imported. The notification in the upper-right corner shows the exact number of files that were imported. You will be able to monitor the progress. The import may take 5-10 minutes to complete, depending on the size of the files.

To use data from the PFB (Avro) file in your analyses, you first need to extract it from the archive and then convert it to JSON, following the procedure below.


Extract and convert Avro files to JSON

This step takes place in Data Studio on CAVATICA.

- Access the project containing the data you imported from BioData Catalyst powered by Gen3.

- Open the Data Studio tab. This takes you to the Data Studio home page. If you have previous analyses, they will be listed on this page.

- In the top-right corner click Create new analysis. The Create new analysis wizard is displayed.

- Name your analysis in the Analysis name field.

- Select JupyterLab as the analysis environment.

- Keep the default Environment setup. Each setup is a preinstalled set of libraries that is tailored for a specific purpose.

- Keep the default Instance type and Suspend time settings.

- Click Start. The Platform will start acquiring an adequate instance for your analysis, which may take a few minutes.

- Once the Platform has acquired an instance for your analysis, the JupyterLab home screen is displayed.

- In the Notebook section, select Python 3. A new blank Jupyter notebook opens.

- Start by installing the fastavro library:

      !pip install fastavro

- Import the reader feature from fastavro and import the json Python library:

      from fastavro import reader
      import json

- Unpack the gzip file and save it in the Avro format. Make sure to replace <gzip-file-name> in the code below with the name of the gzip archive in your project. The /sbgenomics/project-files/ path is the standard path used to reference project files in a Data Studio analysis.

      !tar -xzvf /sbgenomics/project-files/<gzip-file-name>.avro.gz

- Use the reader functionality of the fastavro library to read the Avro file and assign its content to the file variable. Make sure to replace <avro-file-name> with the name of the Avro file that was extracted from the gzip archive in the previous step.

      # Collect every record from the Avro file into a list
      file = []
      with open('<avro-file-name>.avro', 'rb') as fo:
          avro_reader = reader(fo)
          for record in avro_reader:
              file.append(record)

- Save the content to a JSON file. Make sure to replace <json-file-name> with the actual name you want to use for the JSON file.

      # Write the collected records as indented JSON
      with open('<json-file-name>.json', 'w') as fp:
          json.dump(file, fp, indent=2)

- Finally, save the JSON file to the /sbgenomics/output-files/ directory within the analysis. When you stop the Data Studio analysis, the JSON file will be saved to the project, and will be available for further use in tasks within that project.

      !cp <json-file-name>.json /sbgenomics/output-files/
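Once the JSON file exists, a quick sanity check is to count how many records it holds per node type. The sketch below is one possible way to do that using only the Python standard library. It assumes each record is a dictionary with a `name` field identifying its node type, which is how PFB entities are commonly laid out; verify this against your own file. The helper names `load_records` and `count_records_by_node` and the sample values are hypothetical.

```python
import json
from collections import Counter


def load_records(path):
    """Load the list of records previously written with json.dump."""
    with open(path) as fp:
        return json.load(fp)


def count_records_by_node(records):
    """Count records per node name, skipping entries without a name.

    Assumes each record is a dict with a 'name' key (an assumption about
    the PFB layout, not something guaranteed by this guide).
    """
    return Counter(
        r["name"] for r in records if isinstance(r, dict) and r.get("name")
    )


# Small in-memory stand-in for real data; all values are made up.
sample_records = [
    {"id": None, "name": "Metadata", "object": {}, "relations": []},
    {"id": "s1", "name": "subject", "object": {"sex": "female"}, "relations": []},
    {"id": "s2", "name": "subject", "object": {"sex": "male"}, "relations": []},
]

counts = count_records_by_node(sample_records)
for node_name, n in counts.most_common():
    print(node_name, n)
```

To run this on your own data, pass the path of the JSON file you created to `load_records` and feed the result to `count_records_by_node`.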