Project and file locations (Multi-cloud)

Overview

Continuous decreases in sequencing costs have led to exponential growth in the amount of data generated by next-generation sequencing (NGS) technology. Because of its high volume, this data is usually stored in the cloud, which makes downloading or moving these files an extremely expensive operation.

To optimize task execution on CAVATICA and its costs, it is important to understand the basics of where your files are stored and where execution takes place.

CAVATICA currently works with two cloud providers, Amazon Web Services (AWS) and Google Cloud Platform (GCP), both of which are available for file storage and computation. Each provider hosts its cloud resources in multiple locations around the world, called regions.

If you store your files in AWS or GCP US regions, CAVATICA lets you manage all your work from a single space and spin up the chosen computation resources where your data lives, which makes it much easier to optimize the cost of your work.

In order to use files as inputs for a task on CAVATICA, the files need to be either 1) uploaded/imported to CAVATICA; 2) accessed from a mounted Amazon Simple Storage Service (S3) or Google Cloud Storage (GCS) bucket; or 3) accessed from our publicly available files (Public Reference Files, Public Test Files and available datasets).

The files may or may not be in the same location as the computation instances used to process them; they could be in different regions or even on different cloud providers. Costs are lowest when the files are in the same location as the computation instances, since there are no additional data transfer costs and the files can be used directly from where they are stored.

However, if these locations are in different regions or belong to different cloud providers, there will be additional data transfer costs, since the files will need to be transferred from the location where they are stored to the location where the computation is done. This data transfer is charged to the user by the cloud infrastructure provider (AWS or GCP).

Seven Bridges provides full transparency of data transfer costs charged by cloud infrastructure providers and allows you to optimize them by defining your project location as described below. For a complete list of data transfer prices, please refer to Amazon S3 Data Transfer Pricing and Google Cloud Storage Network Pricing.

Project locations

When creating a project, you need to define its location, specifically the cloud provider and region where the tasks in the project will be executed. The available options are:

- aws:us-east-1 - AWS US East (N. Virginia)

- google:us-west1 - GCP US West (Oregon)
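Each option above pairs a cloud provider with a region in a provider:region identifier. If you track project locations in your own scripts, a minimal sketch like the following can parse and validate these identifiers. The `Location` class, the `PROJECT_LOCATIONS` table, and the `is_project_location` helper are hypothetical illustrations, not part of any CAVATICA API.

```python
from dataclasses import dataclass

# Project locations listed above, keyed by identifier (hypothetical table).
PROJECT_LOCATIONS = {
    "aws:us-east-1": "AWS US East (N. Virginia)",
    "google:us-west1": "GCP US West (Oregon)",
}


@dataclass(frozen=True)
class Location:
    """A cloud provider and region pair, such as aws:us-east-1."""

    provider: str
    region: str

    @classmethod
    def parse(cls, identifier: str) -> "Location":
        # Split on the first colon: "<provider>:<region>".
        provider, sep, region = identifier.partition(":")
        if not sep or not provider or not region:
            raise ValueError(f"expected '<provider>:<region>', got {identifier!r}")
        return cls(provider, region)

    def __str__(self) -> str:
        return f"{self.provider}:{self.region}"


def is_project_location(identifier: str) -> bool:
    """Return True if the identifier is one of the listed project locations."""
    return identifier in PROJECT_LOCATIONS


if __name__ == "__main__":
    loc = Location.parse("google:us-west1")
    print(loc.provider, loc.region)              # google us-west1
    print(PROJECT_LOCATIONS[str(loc)])           # GCP US West (Oregon)
    print(is_project_location("aws:eu-west-1"))  # False
```

A frozen dataclass is used so that parsed locations can be compared with `==` and used as dictionary keys.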

The region you select is where all computation for your project takes place, including both tasks and Data Studio analyses. All files added to the project (analysis outputs, user uploads, and imports) are also stored at this location. This setting helps you organize your workspace in the most cost-effective way while leaving you in control of execution and file organization.

All data uploaded to CAVATICA or generated from analyses on CAVATICA before multiple project locations became available is stored in the AWS US East (aws:us-east-1) region, which remains the default project location. When creating a project, keep the default location (aws:us-east-1) unless you plan to run extensive analyses on data you have in the GCP US West (google:us-west1) region.

File locations

In order to use files as inputs for a task on CAVATICA, the files need to be:

- Uploaded/imported to CAVATICA. Files uploaded to a project on CAVATICA are stored at the location (cloud provider and region) selected as the project location when the project was created. Because all analyses (tasks and Data Studio) run on computation instances at the same location, no additional data transfer costs are incurred.

- Accessed from a mounted Amazon Simple Storage Service (S3) or Google Cloud Storage (GCS) bucket. CAVATICA allows you to mount your own external bucket where you store the files that you want to use as inputs for an analysis. In this case, there will be additional data transfer costs if your files are hosted at a different location from the one defined for your project. See below for details.

- Used from publicly available files (Public Reference Files, Public Test Files and available public datasets). Files that are available through the Public Reference Files and Public Test Files repositories, as well as public datasets on CAVATICA, will not cause additional data transfer costs, regardless of your project's location.

- Generated as outputs from a previously completed task within the same project. Task outputs are stored at the defined project location and will not cause any additional data transfer costs when used as inputs in a different task within the same project.
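The rules in the list above can be condensed into a single check. This is a minimal sketch that assumes locations are written as provider:region strings; `FileSource` and `incurs_transfer_cost` are hypothetical names chosen for illustration, and the function only restates the documented rules rather than reflecting how CAVATICA actually computes costs.

```python
from enum import Enum


class FileSource(Enum):
    """Where a task input comes from (the four options listed above)."""

    UPLOADED = "uploaded or imported to the project"
    MOUNTED_BUCKET = "mounted S3 or GCS bucket"
    PUBLIC = "public reference files, public test files, or a public dataset"
    TASK_OUTPUT = "output of a previous task in the same project"


def incurs_transfer_cost(
    source: FileSource, file_location: str, project_location: str
) -> bool:
    """Return True if using the file as a task input adds data transfer costs.

    Locations are "<provider>:<region>" strings such as "aws:us-east-1".
    """
    if source is FileSource.PUBLIC:
        # Public files add no transfer costs regardless of the project location.
        return False
    # Uploads, imports, and task outputs are stored at the project location,
    # so for them file_location equals project_location and this is False.
    # For a mounted bucket, the result depends on where the bucket is hosted.
    return file_location != project_location


if __name__ == "__main__":
    project = "aws:us-east-1"
    print(incurs_transfer_cost(FileSource.MOUNTED_BUCKET, "google:us-west1", project))  # True
    print(incurs_transfer_cost(FileSource.MOUNTED_BUCKET, "aws:us-east-1", project))    # False
    print(incurs_transfer_cost(FileSource.PUBLIC, "google:us-west1", project))          # False
```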

File transfer costs

Additional file transfer costs charged by the cloud infrastructure provider (AWS or GCP) can be incurred in the situations described below.

Using files copied between projects that are in different locations

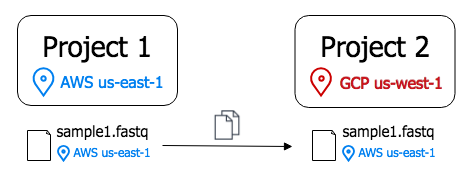

When you copy a file between projects that are in different locations, the file is not physically copied to the target project's location. Instead, it is used from the location where it was originally uploaded, as shown in the diagram below:

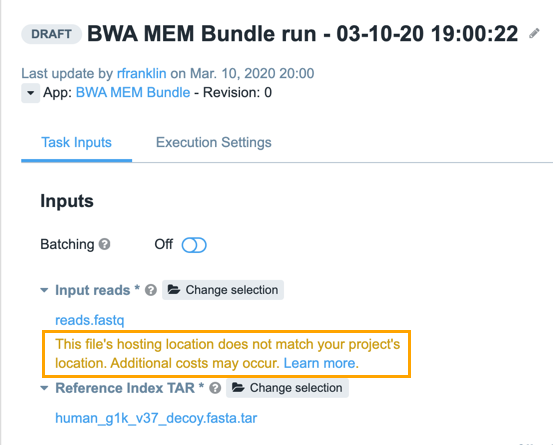

When you set such a file as a task input, a warning is displayed below the input stating that the file's location differs from the project location. Using the file will cause additional costs, because it needs to leave the region or cloud provider where it is stored and be brought to the computation instance that will process it.

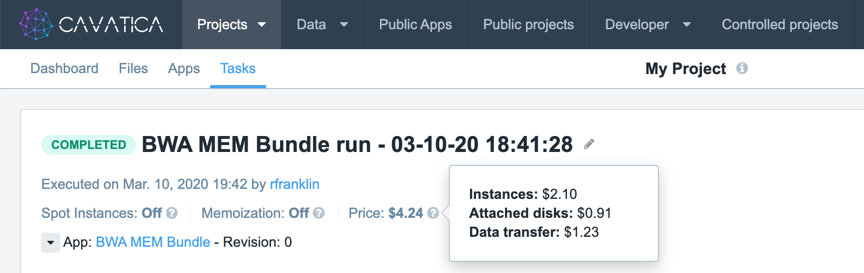

When a task containing such files is completed, the cost of data transfer is included in the total costs of task execution and will be charged together with the task price. Data transfer cost will be shown as a separate item in the task price tooltip on the task page.
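The following sketch shows how a data transfer line item adds to the task price. It assumes a single flat per-GB rate, which is a simplification: actual rates are listed on the AWS and GCP pricing pages mentioned in this article and depend on where the data moves from and to. `TaskPrice` and `transfer_cost` are hypothetical, and the figures in the example are made up, not real prices.

```python
from dataclasses import dataclass
from decimal import Decimal
from typing import Iterable


@dataclass(frozen=True)
class TaskPrice:
    """Task price split into separate items, as in the price tooltip (simplified)."""

    compute: Decimal        # cost of the computation instances
    data_transfer: Decimal  # cost of moving inputs across regions or providers

    @property
    def total(self) -> Decimal:
        return self.compute + self.data_transfer


def transfer_cost(remote_input_sizes_gb: Iterable[Decimal], rate_per_gb: Decimal) -> Decimal:
    """Estimate the transfer cost of inputs stored outside the project location.

    Assumes one flat per-GB rate; real provider pricing may be tiered.
    """
    return sum(remote_input_sizes_gb, Decimal("0")) * rate_per_gb


if __name__ == "__main__":
    # Made-up figures for illustration only; not real prices.
    price = TaskPrice(
        compute=Decimal("4.20"),
        data_transfer=transfer_cost([Decimal("30"), Decimal("20")], Decimal("0.05")),
    )
    print(price.data_transfer)  # 2.50
    print(price.total)          # 6.70
```

`Decimal` is used instead of `float` so that currency amounts add up exactly.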

Seven Bridges provides full transparency of data transfer costs charged by cloud infrastructure providers and passes them through with no extra charge or fee. For a complete list of data transfer prices, please refer to Amazon S3 Data Transfer Pricing and Google Cloud Storage Network Pricing.

Using files from mounted AWS S3 or GCS buckets

Using files from mounted cloud storage buckets can also incur data transfer costs. If your bucket is in a different location from the project in which you want to use the files, the files will need to be transferred to the project's location when you run an analysis, since that is where the computation takes place.

In this case, the additional file transfer costs are charged directly to your account with the cloud storage provider. Also, unlike with files copied between projects, CAVATICA does not display a warning when such files are used as task inputs, even if their location differs from the project location.
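The two situations in this section differ in how the transfer is billed and whether CAVATICA warns you. A minimal sketch encoding that difference as a lookup table follows; `InputKind`, `TransferHandling`, and `describe` are hypothetical names, and the table simply restates the behavior described in this section (copied files: cost included in the task price and flagged with a warning; mounted buckets: cost charged directly by the cloud storage provider, with no warning).

```python
from dataclasses import dataclass
from enum import Enum


class InputKind(Enum):
    COPIED_FROM_OTHER_PROJECT = "file copied from a project in another location"
    MOUNTED_BUCKET = "file in a mounted S3 or GCS bucket"


@dataclass(frozen=True)
class TransferHandling:
    billed_with_task_price: bool  # True: appears as an item in the task price
    warning_shown: bool           # True: CAVATICA warns below the task input


# Restates the behavior described in this section (hypothetical table).
HANDLING = {
    InputKind.COPIED_FROM_OTHER_PROJECT: TransferHandling(True, True),
    InputKind.MOUNTED_BUCKET: TransferHandling(False, False),
}


def describe(kind: InputKind, file_location: str, project_location: str) -> str:
    """Summarize transfer billing and warnings for one task input."""
    if file_location == project_location:
        return "no data transfer cost"
    handling = HANDLING[kind]
    billing = (
        "charged with the task price"
        if handling.billed_with_task_price
        else "charged to your cloud provider account"
    )
    warning = "warning shown" if handling.warning_shown else "no warning shown"
    return f"{billing}; {warning}"


if __name__ == "__main__":
    print(describe(InputKind.MOUNTED_BUCKET, "google:us-west1", "aws:us-east-1"))
    # charged to your cloud provider account; no warning shown
    print(describe(InputKind.COPIED_FROM_OTHER_PROJECT, "aws:us-east-1", "google:us-west1"))
    # charged with the task price; warning shown
```

The missing warning for mounted buckets is the main reason to check bucket locations yourself before running a task.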

To optimize your task execution costs, when creating a project and planning the analyses that will be executed within that project, please keep in mind where your input files are stored and choose a project location that matches the location of your files, if possible.

Also, keep in mind that a project's location cannot be changed once the project has been created.
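Because the location is fixed once the project exists, it can help to tally where your inputs live before creating the project. The sketch below assumes a flat per-GB transfer rate, so keeping the largest volume of data local minimizes cost. `suggest_project_location` is a hypothetical helper; pass only inputs whose location matters (for example, files in mounted buckets), since public files add no transfer cost.

```python
from collections import defaultdict
from typing import Iterable, Tuple

# Project locations listed earlier; the default comes first.
PROJECT_LOCATIONS = ("aws:us-east-1", "google:us-west1")


def suggest_project_location(inputs: Iterable[Tuple[str, float]]) -> str:
    """Return the project location that keeps the most input data local.

    inputs: (location, size_gb) pairs for files whose location matters,
    such as files in mounted buckets. Assumes a flat per-GB transfer rate.
    """
    totals = defaultdict(float)
    for location, size_gb in inputs:
        totals[location] += size_gb
    # Data in any other location is remote either way and does not change
    # the choice. max() returns the first of equal candidates, so ties
    # (including no inputs at all) fall back to the default, aws:us-east-1.
    return max(PROJECT_LOCATIONS, key=lambda loc: totals[loc])


if __name__ == "__main__":
    inputs = [
        ("google:us-west1", 120.0),
        ("aws:us-east-1", 15.0),
        ("google:us-west1", 40.0),
    ]
    print(suggest_project_location(inputs))  # google:us-west1
    print(suggest_project_location([]))      # aws:us-east-1
```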

You can export files from CAVATICA only to an attached volume that is in the same location (cloud provider and region) as your project. Therefore, exporting to a volume does not cause any additional file transfer costs, as the transfer takes place within the same location.
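The export rule amounts to a same-location check. Here is a minimal sketch, with hypothetical function and volume names, that filters attached volumes down to those a project can export to and rejects a mismatched export.

```python
from typing import Dict, List


def exportable_volumes(project_location: str, volumes: Dict[str, str]) -> List[str]:
    """Return the names of attached volumes a project can export to.

    volumes maps a volume name to its "<provider>:<region>" location.
    Only volumes in the project's own location qualify, so exports
    never cross regions or providers.
    """
    return [name for name, location in volumes.items() if location == project_location]


def check_export(project_location: str, volume_location: str) -> None:
    """Raise ValueError if the volume is not in the project's location."""
    if volume_location != project_location:
        raise ValueError(
            f"cannot export from a {project_location} project "
            f"to a {volume_location} volume"
        )


if __name__ == "__main__":
    # Hypothetical volume names for illustration.
    volumes = {"my-s3-volume": "aws:us-east-1", "my-gcs-volume": "google:us-west1"}
    print(exportable_volumes("aws:us-east-1", volumes))  # ['my-s3-volume']
    check_export("google:us-west1", volumes["my-gcs-volume"])  # passes silently
```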
